AI homework – Let’s redefine what homework is and what it’s for

We can’t put the AI genie back in the bottle, so it’s time to redefine what homework is, and what it’s for, suggests Anthony David…

- by Anthony David

- Executive headteacher, consultant, author and leadership coach

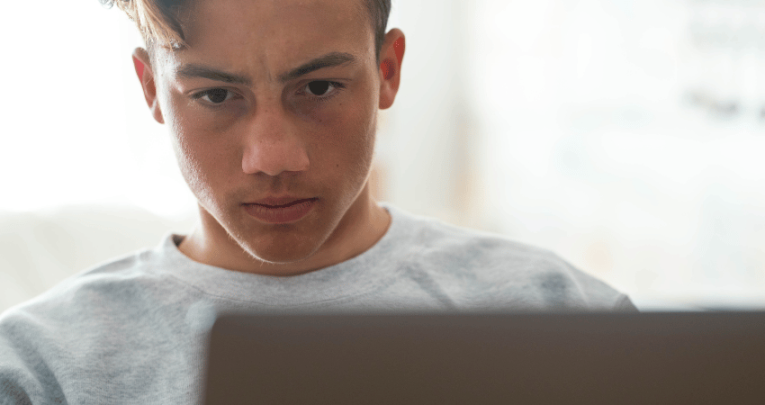

I sometimes think back to the days when ‘plagiarism’ meant copying a paragraph from Wikipedia and hoping nobody would notice. It feels almost quaint now. With the AI genie now truly out of the bottle, the ground has shifted under our feet when it comes to homework.

We’ve spent years building clear expectations around academic honesty, original thinking and the value of struggle. Then along comes a tool which, in seconds, can produce an essay, translate a passage, condense a chapter or write a convincing reflection. It’s all become too easy.

In this brave new world, the obvious question to ask is ‘Has homework had its day?’ If students can hand in polished, fluent and technically correct work without actually needing to engage with the learning process, then what’s the purpose of setting such tasks in the first place?

If the work can be done for them, rather than by them, where does that leave us? It’s tempting to see the landscape currently before us as a kind of lawless wild west – but I don’t think it is.

If anything, the past two years have forced us to rethink what really matters when it comes to homework, and pushed many of us to design tasks that can reveal true understanding in a much more reliable way.

The limits of AI when it comes to homework

A key misconception around AI is that it ‘replaces thinking’. It doesn’t. It imitates, it predicts, it assembles, but it doesn’t know the child, the lesson or the nuances behind how a student reached an idea.

Sure, it can write a competent paragraph as part of a GCSE poetry comparison – but it can’t recreate the conversation that took place in your classroom that morning, or pass comment on a misunderstanding you deliberately unpicked with the class.

And this is where I think the narrative around AI and plagiarism must shift. If a homework task can be completed convincingly by an AI tool, without the student truly understanding the content, then the issue isn’t the child’s dishonesty – it’s that the task is no longer fit for purpose.

That’s not a criticism of teachers; more a recognition that the world has changed faster than school systems usually do.

At the heart of the matter is this quandary: are we assessing the product, or the learning that led to the product?

When AI is used as a shortcut, what’s lost is the process. Our challenge, therefore, becomes one of designing tasks where the process remains visible at all times.

Practical approaches that work

There are, however, some strategies that teachers have been trying with surprising success – including the following…

Anchor homework to the classroom, not the internet

When a task requires students to reference your explanation, your model or their own notes, AI tools will immediately become less helpful to them.

A prompt such as ‘Using the method we practised in period four, solve these three equations and explain which step you found hardest and why’ will produce work that reflects a student’s actual thinking.

AI can certainly try to invent an explanation, but what it can’t do is replicate a moment from your lesson.

Set ‘micro-reflections’ rather than lengthy essays

Instead of tasking students with producing polished extended pieces of writing, ask for short, structured responses that demonstrate how they have processed the material. For example:

- ‘What was the most surprising thing you learnt today, and why?’

- ‘Rewrite today’s key idea in your own words and give one example that wasn’t in the textbook’

- ‘What mistake did you spot yourself making and how did you fix it?’

AI could generate a set of answers, but students will typically reveal their understanding (or lack of it) through the personal details they include.

Ask for evidence of thinking

We’ve reintroduced rough workings, annotated pages, mind-maps and voice notes into certain subjects. Asking a student to submit three photographs of their process, or a 30-second audio reflection alongside their work, gives you something that AI can’t easily fake. This is especially effective in subjects like English, science, DT and maths.

Set choice-based tasks

AI can often struggle with tasks that involve expressing personal preferences, reasoning or lived experience.

A history task that asks students to ‘Choose the argument you think is strongest and justify it’ shifts the focus away from producing a ‘perfect’ paragraph to revealing the student’s own thinking.

Actually use AI (as a tool for learning, and not learning avoidance)

Students will often turn to AI because they feel stuck, overwhelmed or worried about getting tasks wrong. If we normalise AI as a thinking partner – as something that can summarise passages, generate examples or explain concepts more simply – then we take away the secrecy.

In our schools, we explicitly teach students how to use AI ethically for specific tasks. These approved uses include:

- checking for understanding

- improving revision efficiency

- generating practice questions

- rephrasing something they didn’t grasp in class

The rule we teach is simple. AI may support your learning, but it cannot stand in for your learning.

What about AI detection when it comes to homework?

Teachers often ask whether AI-detection tools are the answer. I take a cautious view. Detection will improve, but it will never be perfect, and the risk of generating false positives is too high to rely solely on automated judgements.

Instead, I approach detection tools as a prompt. If they flag something, that’s a sign to talk to the student, not a cue to jump to conclusions.

The more important point to bear in mind is that if we can design homework that makes students’ learning visible, detection tools become far less necessary.

Our students’ voices, the misconceptions they have and the specific steps they’ve taken can become part of the work itself.

For me, the real opportunity in the AI era is to shift our existing culture around academic honesty. Rather than framing plagiarism as a punishable offence, we can instead position AI misuse as a misunderstanding of what homework is for.

Unexpected benefits

When students see homework as an extension of learning, rather than as a performance, they’re far more likely to use AI constructively.

The conversation can start to look like this: ‘I can see you used AI to write this paragraph. Tell me what you understood and what you didn’t.’

That doesn’t close doors; it opens them. Our overall aim here shouldn’t be to catch students out, but to help them grow into the kind of thinkers who don’t see the need to take such shortcuts.

AI has given us some unexpected benefits too. It’s forced us to strengthen the link between instruction and assessment.

It’s encouraged more extensive use of formative feedback, a greater emphasis on low-stakes practice and increased transparency around how learning develops.

AI has pushed teachers to focus more on knowledge retrieval, application, misconceptions and reasoning – the very things that matter most in long-term retention.

Perhaps most importantly of all, it’s invited us to talk openly with students about the nature of thinking. That includes all the messy parts, such as not hiding the draft stages, and what it means to learn deeply, rather than perfectly.

In a world of AI, homework is evolving

The age of AI hasn’t killed homework. It’s made us more precise, thoughtful and realistic about what we’re actually trying to see when we set tasks.

AI isn’t going away. Our task now should therefore be to build an ecosystem in which students learn to use AI responsibly, and teachers feel confident designing meaningful assignments.

This way, academic honesty can become part of how we help children grow, rather than just something we need to constantly police.

Homework still matters, but its purpose has changed. It’s no longer about producing the neatest essay, but about showing the journey of learning – which is something no AI can convincingly fake.

In many ways, all that’s really happened is that we’ve evolved into the next stage after Wikipedia-grabbing. We pivoted then, and in all likelihood, we’ll pivot again.

Anthony David is an executive headteacher working across Central and North London, and author of the book AI with Education – How to Amplify, not Substitute, Learning.