If You Predicted Your Students’ GCSE Results This Year, You Were Plain Lucky – And Here’s Why

We might as well be reading tea leaves or trying to make sense of chicken guts, insists David Didau

- by David Didau

- Writer, speaker & senior lead practitioner for English at OAT

Every year, schools feel under enormous pressure to predict what GCSE grades their students are likely to achieve. In the past, this approach sort of made sense.

Of course, there was always a margin of error, but most experienced teachers just knew what, say, a C grade looked like in their subject. And when at least half of students' grades were based on 'banked' modular results, the possibility of making meaningful, accurate predictions was so much greater.

Now, however, many of the certainties we may have relied upon have gone. For the new GCSEs, Ofqual has worked hard to ensure that it's more or less impossible to predict what grade a particular pupil is likely to get, and for 2017 candidates, we had no way of knowing what the grade boundaries would be until the results were published. If you managed to predict your students' results accurately this year, you were lucky.

This doesn't mean that there are no certainties at all, or that nothing can be predicted. Nationally, we could be fairly certain about what would happen this year. Ofqual confirmed that students would not be disadvantaged for being the first cohort to take the new specifications.

For the new maths and English exams in particular, we knew that the new grade 4 would be roughly equivalent to the old C grade, and the new grade 7 to the old A grade.

We also had a good idea of the likely distribution of grades. No matter how hard the exams, or how poorly pupils did, 70% of those taking the new exams would get a grade 4 or above, and the percentage awarded a grade 7 or above would be 17% in English and 20% in maths.

No blame – or credit

But such predictions are far harder to make at school level. Although there has been a predictable upward trend in GCSE results ever since their inception, individual schools' results have always gone up and down. When this happens, we look for someone to blame, or someone to take the credit, for such swings – but in fact, much of this movement is down to natural volatility: results go up and down, and it's got nothing to do with you.

A dip is not your fault, and an increase is not something to pat yourself on the back for. In the past, in subjects such as English language, individual schools' results have swung by as much as 19% up or down from one year to the next. If you are making predictions about the grades of individual students in this climate, you're just guessing. Your guess might turn out to be right, but it's a house of cards assembled on the sandiest of shores.

No assessment allows us to predict future performance accurately, so when we say things like, 'He's a strong B' or, 'She'll definitely make her target grade,' we might as well be reading tea leaves or trying to make sense of chicken guts. The problem is that, because you've input some data, your guesses feel a bit more 'mathsy' than that. But because the data is based on made-up numbers, it can't tell you much at all.

Think of it like this: if I give a class an assessment and find that 75% get a passing grade, and then use this information to predict what grades the students will get in a few months' time, it might feel like I'm doing something robust and sensible, but I'm not. It would be essentially the same as finding out what each student watched on telly last night and attempting to predict which channel they're likely to be watching in a few months' time.

The more often you repeat the exercise, the more the error compounds. The more data you collect and the more you try to analyse it, the less you are likely to perceive.

Looking at the past leads us to believe we can control the present and predict the future.

Progress, not prediction

So, what should we do? It's all very well to accept that predicting grades isn't possible, but if you've got an Ofsted inspector breathing down your neck asking for the impossible, what can you do? Fear not: help is at hand. In a recent speech, Ofsted's new chief, Amanda Spielman, said this:

“At Ofsted, we are all too aware of the challenge of interpreting data wisely and placing it in its proper context. And we are particularly conscious of the changing exam landscape and all the increased volatility of results in periods of transition.

“We know, for example, that it is particularly difficult to predict outcomes this year in the new English and maths GCSEs … no one in schools – however good – can predict Progress 8 this accurately.

“So … I have been really clear that our inspectors aren’t expecting these predictions. Instead, we will be looking at whether schools know that pupils are making progress and, if they are not, whether the management team is taking effective action.”

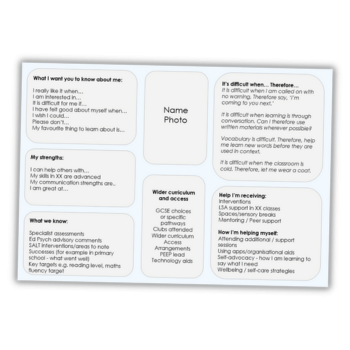

No one is expecting schools to predict results; all you're expected to do is know how your students have performed in the past and what you need to do to ensure they make progress. The difference might not seem immediately obvious, but this is a much more sensible approach to data.

Let's re-run the scenario from earlier, where I found that 75% of students achieved a 'passing grade' in an assessment. Rather than using this information to predict the future, I should look at the elements that students struggled with.

If I find, for instance, that over half struggled with topic x but pretty much everyone did OK with topic y, then a solution presents itself: we need to spend less time on topic y and more time on topic x.

Compare that with our 'real world' example: I find out what students watched on telly last night and then use this to introduce them to things they might be better off watching.

This is what using data to demonstrate students' progress looks like: find out what children can actually do, and then show how you have tried to meet their needs.

David Didau is an independent education consultant and writer. He blogs at learningspy.co.uk.